一、Hadoop守护进程的环境配置

管理员可以使用etc/hadoop/hadoop-env.sh脚本定制Hadoop守护进程的站点特有环境变量;另外可选用的脚本还有etc/hadoop/mapred-env.sh和etc/hadoop/yarn-evn.sh两个。通常用于配置各守护进程jvm配置参数的环境变量有如下几个:

- HADOOP_NAMENODE_OPTS: 配置NameNode;

- HADOOP_DATANODE_OPTS: 配置DataNode;

- HADOOP_SECONDARYNAMENODE_OPTS: 配置Secondary NameNode;

- YARN_RESOURCEMANAGER_OPTS: 配置ResourceManager;

- YARN_NODEMANAGER_OPTS: 配置NodeManager;

- YARN_PROXYSERVER_OPTS: 配置WebAppProxy;

- HADOOP_JOB_HISTORYSERVER_OPTS: 配置Map Reduce Job History Server;

- HADOOP_PID_DIR: 守护进程ID文件的存储目录;

- HADOOP_LOG_DIR: 守护进程日志文件存储目录;

- HADOOP_HEAPSIZE /YARN_HEAPSIZE: 堆内存可使用的内存空间上限,默认为1000G;

例如:如果要为NameNode使用parallelGC, 可在hadoop-env.sh文件中使用如下语句:

export HADOOP_NAMENODE_OPTS="-XX:+UseParallelGC"

1.下载Hadoop

官网:https://hadoop.apache.org/release/2.10.0.html

wget下载

[root@centos01 package]# wget https://archive.apache.org/dist/hadoop/common/hadoop-2.10.0/hadoop-2.10.0.tar.gz

2.配置Hadoop环境变量

解压安装包至指定目录下:

[root@centos01 ~]# mkdir -pv /bdapps/ [root@centos01 package]# tar xf hadoop-2.10.0.tar.gz -C /bdapps/ [root@centos01 package]# ln -sv /bdapps/hadoop-2.10.0/ /bdapps/hadoop ‘/bdapps/hadoop’ -> ‘/bdapps/hadoop-2.10.0/’

3.编辑配置文件/etc/profile.d/hadoop.sh

编辑此文件,定义类似如下环境变量,设定Hadoop的运行环境:

export HADOOP_PREFIX="/bdapps/hadoop"

export PATH=$PATH:$HADOOP_PREFIX/bin:$HADOOP_PREFIX/sbin

export HADOOP_PREFIX="/bdapps/hadoop"

export PATH=$PATH:$HADOOP_PREFIX/bin:$HADOOP_PREFIX/sbin

export HADOOP_COMMON_HOME=${HADOOP_PREFIX}

export HADOOP_HDFS_HOME=${HADOOP_PREFIX}

export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}

export HADOOP_YARN_HOME=${HADOOP_PREFIX}

4.配置Java环境

配置java环境参考琼杰笔记文档:Linux安装Tomcat服务器和部署Web应用 ,

设置JAVA_HOME变量:

[root@centos01 profile.d]# cat /etc/profile.d/java.sh export JAVA_HOME=/usr/java/jdk1.8.0_191-amd64

二、创建运行Hadoop进程的用户和相关目录

1.创建用户和组

处于安全等目的,通常需要用特定的用户来运行hadoop不同的守护进程,例如,以hadoop为组,分别用三个用户yarn, hdfs和mapred来运行相应的进程。

[root@centos01 ~]# groupadd hadoop [root@centos01 ~]# useradd -g hadoop yarn [root@centos01 ~]# useradd -g hadoop hdfs [root@centos01 ~]# useradd -g hadoop mapred

2.创建数据和日志目录

Hadoop需要不同权限的数据和日志目录,这里以/data/hadoop/hdfs为hdfs数据存储目录。

[root@centos01 ~]# mkdir -pv /data/hadoop/hdfs/{nn,snn,dn}

[root@centos01 ~]# chown -R hdfs:hadoop /data/hadoop/hdfs/

然后,在hadoop的安装目录中创建logs目录,并修改hadoop所有文件属主和属组:

root@centos01 ~]# cd /bdapps/hadoop [root@centos01 hadoop]# mkdir logs [root@centos01 hadoop]# chmod g+w logs [root@centos01 hadoop]# chown -R yarn:hadoop ./*

三、配置Hadoop

1.编辑配置文件etc/hadoop/core-site.xml

core-site.xml全局配置文件包含了NameNode主机地址以及其监听RPC端口等信息,对于伪分布式模型的安装来说,其主机地址为localhost,NameNode默认使用的RPC端口为8020,其简要的配置内容如下所示:

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:8020</value>

<final>true</final>

</property>

</configuration>

2.编辑配置文件etc/hadoop/hdfs-site.xml

hdfs-site.xml主要用于配置HDFS相关的属性,例如复制因子(即数据块的副本数)、NN和DN用于存储数据的目录等。数据块的副本数对于伪分布式Hadoop应该为1,而NN和DN用于存储的数据的目录伪前面步骤中专门为其创建的路径。另外,前面步骤中也为SNN创建了相关的目录,这里也一并配置其为启动状态。

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///data/hadoop/hdfs/nn</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///data/hadoop/hdfs/dn</value>

</property>

<property>

<name>fs.checkpoint.dir</name>

<value>file:///data/hadoop/hdfs/snn</value>

</property>

<property>

<name>fs.checkpoint.edits.dir</name>

<value>file:///data/hadoop/hdfs/snn</value>

</property>

</configuration>

3.编辑配置文件etc/hadoop/mapred-site.xml

mapred-site.xml文件用于配置集群的MapReduce framword, 此处应该指定使用yarn,另外的可用值还有local和classic。mapred-site.xml默认不存在,但有模块文件mapred-site.xml.template,只需要将其复制mapred-site.xml即可。

[root@centos01 hadoop]# cp mapred-site.xml.template mapred-site.xml

配置mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

4.编辑配置文件etc/hadoop/yarn-site.xml

yarn-site.xml用于配置YARN进程及YARN的相关属性。首先需要指定ResourceManager守护进程的主机和监听的端口,对于伪分布式模型来说,其主机为localhost,默认端口为8032,;其次需要指定ResourceManager使用的scheduler,以及NodeManager的辅助服务。

<configuration>

<property>

<name>yarn.resourcemanager.address</name>

<value>localhost:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>localhost:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>localhost:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>localhost:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>localhost:8088</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.auxservices.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>

</property>

</configuration>

5.编辑环境变量配置文件etc/hadoop/hadoop-env.sh和etc/hadoop/yarn-env.sh

Hadoop定义依赖到的特定JAVA环境,也可以编辑这两个脚本文件,为其JAVA_HOME取消注释并配置合适的值即可。此外,Hadoop大多数守护进程默认使用的堆大小为1GB,但现实应用中,可能需要对其各类进程的堆大小做出调整,这只需要编辑此两个文件中的相关变量值即可,例如HADOOP_HEAPSIZE、HADOOP_JOB_HISTORY_HEAPSIZE、JAVA_HEAP_SIZE和YARN_HEAP_SIZE等。

6.编辑配置文件slaves文件

slaves文件存储了当前集群的所有slave节点的列表,对于伪分布式模型,其文件内容仅应该为localhost,这也的确是这个文件的默认值。因此,伪分布式模型中,此文件的内容保持默认即可。

四、格式化HDFS

在HDFS的NN启动之前需要先初始化其用于存储数据的目录。如果hdfs-site.xml中dfs.namenode.name.dir属性指定的目录不存在,格式化命令会自动创建之;如果事先存在,请确保其权限设置正确,此时格式操作会清除其内部的所有数据并重新建立一个新的文件系统。以hdfs的用户身份执行初始化命令:

[hdfs@centos01 ~]$ su - hdfs [hdfs@centos01 ~]$ hdfs namenode -format

当执行结果显示包含如下内容表示格式化成功:

...... 20/07/30 09:59:31 INFO common.Storage: Storage directory /data/hadoop/hdfs/nn has been successfully formatted. ......

hdfs命令:

[root@centos01 ~]# hdfs --help

Usage: hdfs [--config confdir] [--loglevel loglevel] COMMAND

where COMMAND is one of:

dfs run a filesystem command on the file systems supported in Hadoop.

classpath prints the classpath

namenode -format format the DFS filesystem

secondarynamenode run the DFS secondary namenode

namenode run the DFS namenode

journalnode run the DFS journalnode

zkfc run the ZK Failover Controller daemon

datanode run a DFS datanode

debug run a Debug Admin to execute HDFS debug commands

dfsadmin run a DFS admin client

dfsrouter run the DFS router

dfsrouteradmin manage Router-based federation

haadmin run a DFS HA admin client

fsck run a DFS filesystem checking utility

balancer run a cluster balancing utility

jmxget get JMX exported values from NameNode or DataNode.

mover run a utility to move block replicas across

storage types

oiv apply the offline fsimage viewer to an fsimage

oiv_legacy apply the offline fsimage viewer to an legacy fsimage

oev apply the offline edits viewer to an edits file

fetchdt fetch a delegation token from the NameNode

getconf get config values from configuration

groups get the groups which users belong to

snapshotDiff diff two snapshots of a directory or diff the

current directory contents with a snapshot

lsSnapshottableDir list all snapshottable dirs owned by the current user

Use -help to see options

portmap run a portmap service

nfs3 run an NFS version 3 gateway

cacheadmin configure the HDFS cache

crypto configure HDFS encryption zones

storagepolicies list/get/set block storage policies

version print the version

Most commands print help when invoked w/o parameters.

五、启动Hadoop

Hadoop2的启动等操作可以通过其位于sbin路径下的专用脚本运行:

- NameNode: hadoop-daemon.sh (start|stop) namenode

- DataNode: hadoop-daemon.sh (start|stop) datanode

- Secondary NameNode: hadoop-daemon.sh (start|stop) secondarynamenode

- ResourceManager: yarn-daemon.sh (start|stop) resourcemanager

- NodeManager: yarn-daemon.sh (start|stop) nodemanager

1.启动HDFS服务

HDFS有三个守护进程:namenode、dataname和secondarynamenode, 它们都可通过hadoop-daemon.sh脚本启动或停止,以hdfs用户执行相关命令即可:

[root@centos01 sbin]# su - hdfs Last login: Sat Aug 1 17:10:05 CST 2020 on pts/0 [hdfs@centos01 sbin]$ hadoop-daemon.sh start namenode starting namenode, logging to /bdapps/hadoop/logs/hadoop-hdfs-namenode-centos01.out [hdfs@centos01 sbin]$ hadoop-daemon.sh start datanode starting datanode, logging to /bdapps/hadoop/logs/hadoop-hdfs-datanode-centos01.out [hdfs@centos01 sbin]$ hadoop-daemon.sh start secondarynamenode starting secondarynamenode, logging to /bdapps/hadoop/logs/hadoop-hdfs-secondarynamenode-centos01.out

以上三个命令均在执行完成后给出了一个日志信息保存指向的信息,但是,实际用于保存日志的文件是以“.log”为后缀的文件,而非以“.out”结尾。可通过日志文件中的信息来判断进程启动是否正常完成。如果所有进程正常启动,可通过jdk提供的jps命令来查看java的进程状态:

[hdfs@centos01 sbin]$ jps 6817 SecondaryNameNode 7074 DataNode 7129 Jps 6700 NameNode

2.启动YARN服务

YARN有两个守护进程:resourcemanager和nodemanager,它们都可以通过yarn-daemon.sh脚本启动或停止,以yarn用户执行相关的命令即可,如下:

[root@centos01 profile.d]# su - yarn [yarn@centos01 sbin]$ yarn-daemon.sh start resourcemanager starting resourcemanager, logging to /bdapps/hadoop/logs/yarn-yarn-resourcemanager-centos01.out [yarn@centos01 sbin]$ yarn-daemon.sh start nodemanager starting nodemanager, logging to /bdapps/hadoop/logs/yarn-yarn-nodemanager-centos01.out

通过jps命令查看java进程状态:

[yarn@centos01 ~]$ jps 8625 NodeManager 8532 ResourceManager 9231 Jps

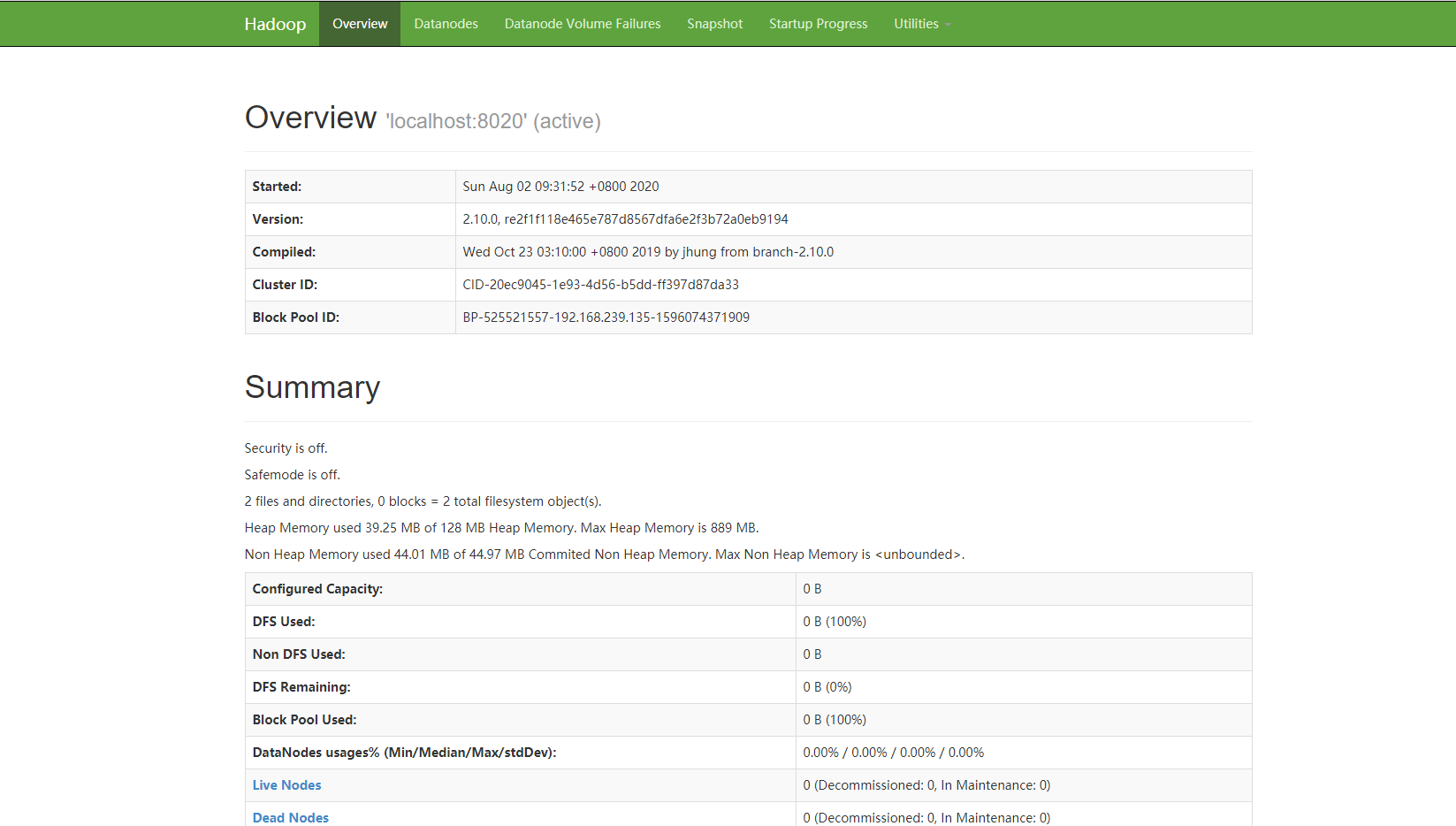

六、Web UI概览

HDFS和YARN ResourceManager各自提供了一个Web接口,通过这些接口可检查HDFS集群即YARN集群的相关状态信息,它们的访问接口如下,实际使用中,需要将NameNodeHost和ResourceManagerHost分别改为其相应的主机地址:

- HDFS-NameNode: http://<NameNodeHost>:50070/

- YARN-ResourceManager: http://<ResourceManagerHost>:8088/

注意:yarn-site.xml文件中yarn.resourcemanager.webapp.address属性的值如果定义为“”“localhost:8088”,则WebUI仅监听于127.0.0.1地址上的8088端口。

七、运行测试程序

Hadoop-YARN自带了许多样例程序,位于hadoop安装路径下的share/hadoop/mapreduce/目录里,其中的hadoop-mapreduce-examples可用作mapreduce程序测试。

[hdfs@centos01 ~]$ yarn jar /bdapps/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.10.0.jar An example program must be given as the first argument. Valid program names are: aggregatewordcount: An Aggregate based map/reduce program that counts the words in the input files. aggregatewordhist: An Aggregate based map/reduce program that computes the histogram of the words in the input files. bbp: A map/reduce program that uses Bailey-Borwein-Plouffe to compute exact digits of Pi. dbcount: An example job that count the pageview counts from a database. distbbp: A map/reduce program that uses a BBP-type formula to compute exact bits of Pi. grep: A map/reduce program that counts the matches of a regex in the input. join: A job that effects a join over sorted, equally partitioned datasets multifilewc: A job that counts words from several files. pentomino: A map/reduce tile laying program to find solutions to pentomino problems. pi: A map/reduce program that estimates Pi using a quasi-Monte Carlo method. randomtextwriter: A map/reduce program that writes 10GB of random textual data per node. randomwriter: A map/reduce program that writes 10GB of random data per node. secondarysort: An example defining a secondary sort to the reduce. sort: A map/reduce program that sorts the data written by the random writer. sudoku: A sudoku solver. teragen: Generate data for the terasort terasort: Run the terasort teravalidate: Checking results of terasort wordcount: A map/reduce program that counts the words in the input files. wordmean: A map/reduce program that counts the average length of the words in the input files. wordmedian: A map/reduce program that counts the median length of the words in the input files. wordstandarddeviation: A map/reduce program that counts the standard deviation of the length of the words in the input files.

例如,运行pi程序,用Monte Carlo方法估算Pi(π)值。pi命令有两个参数,第一个参数是指要运行map的次数,第二个参数是指每个map任务取样的个数,而这两个数相乘即为总的取样数。

真分布式模型的安装部署参考琼杰笔记文档:

琼杰笔记

琼杰笔记

评论前必须登录!

注册